Literal

Helping teens discover new stories with the power of AI

Summary

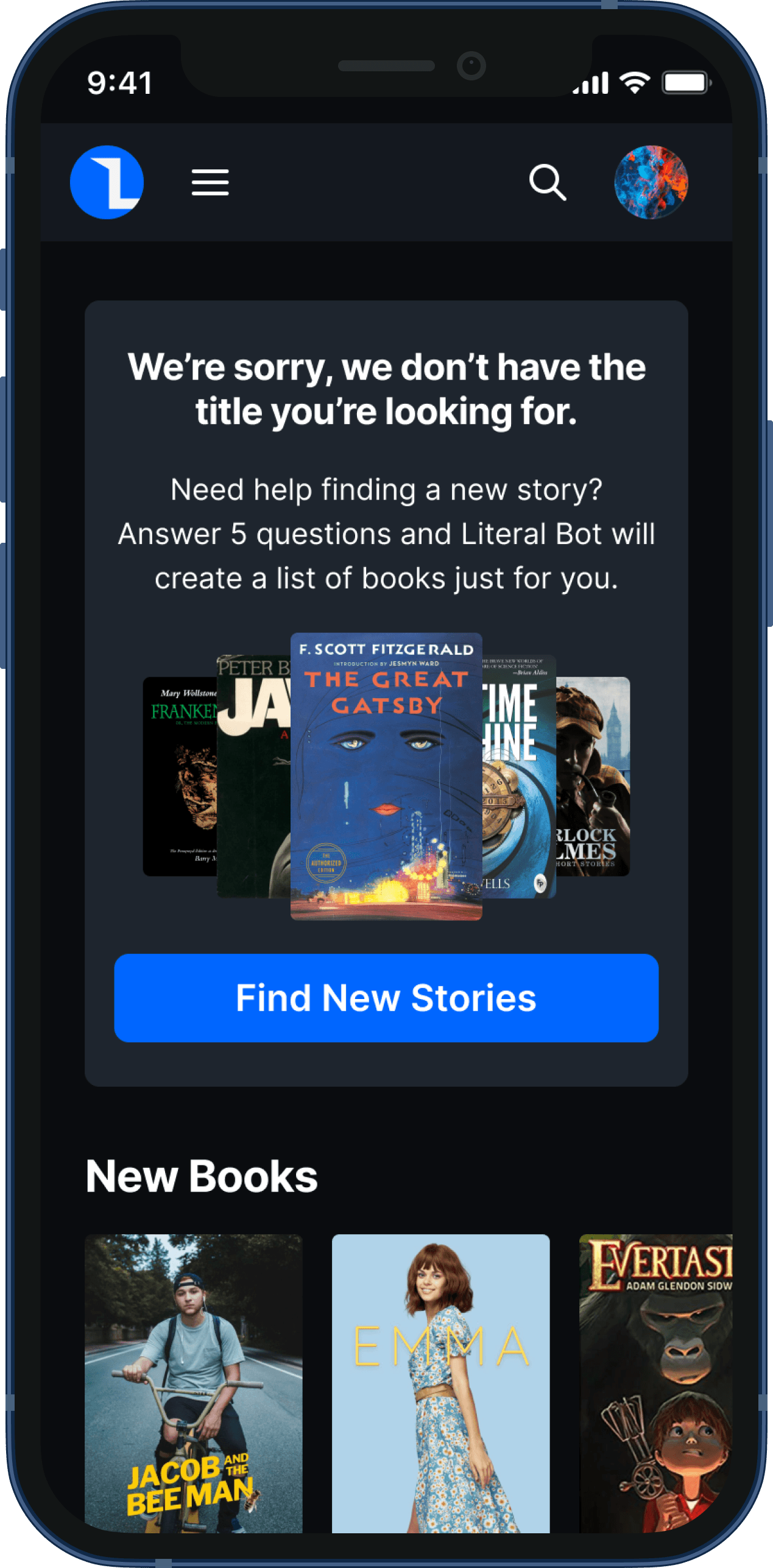

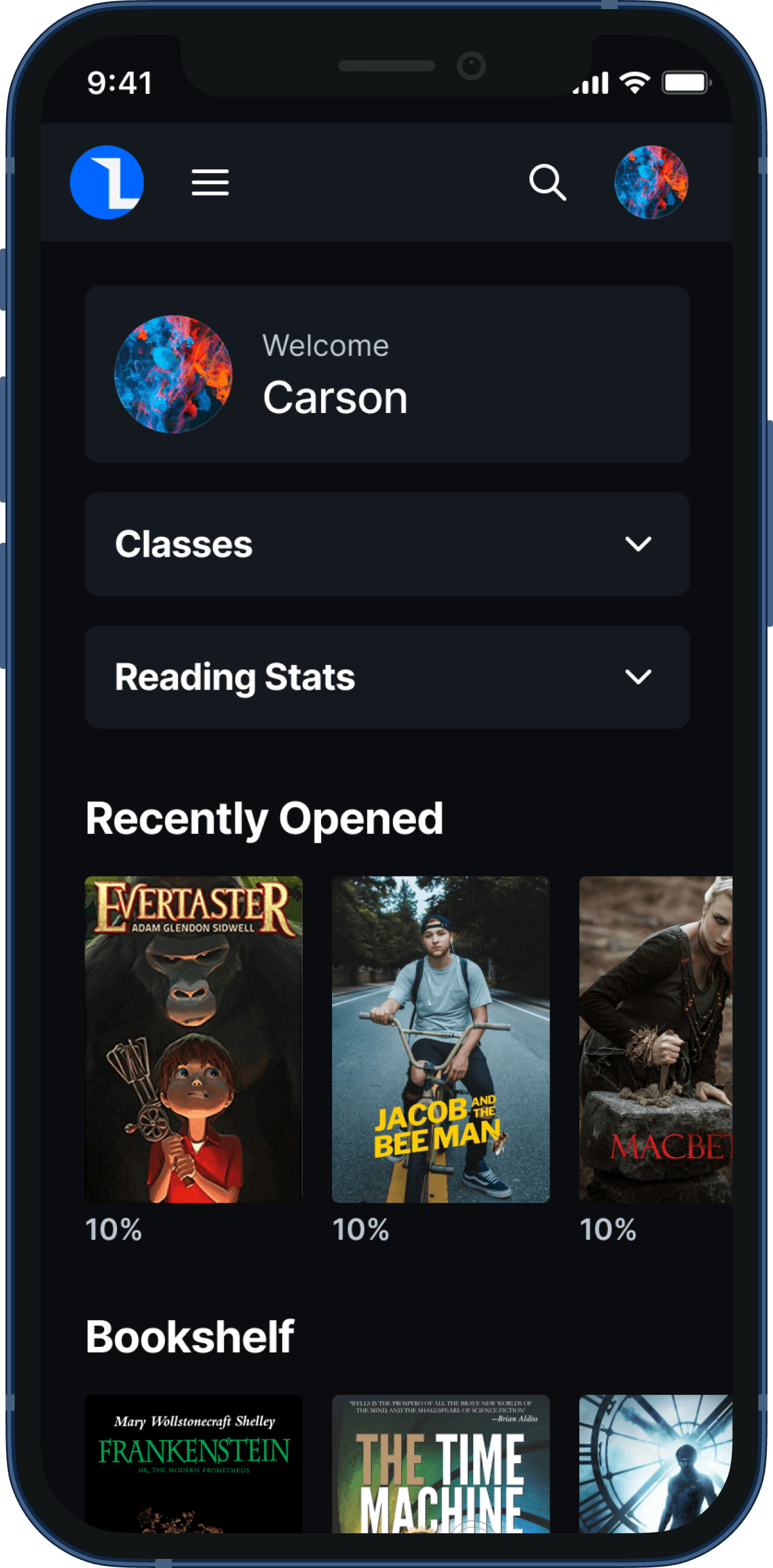

Literal was created to develop a new generation of engaged readers. Teenagers spend hours on their phones but only minutes reading books, and Literal aimed to change this by creating a reading platform that felt as natural as texting. But when students couldn't find familiar titles in our library, they stopped looking for alternatives. The solution? Use AI to connect students with the thousands of great books already in our library.

Roles

Lead UX designer

Team

UX designer (me)

Product manager (CEO)

Engineers

Deliverables

User flows

Job stories

User story map

Wireframes

UI Design

Prototype

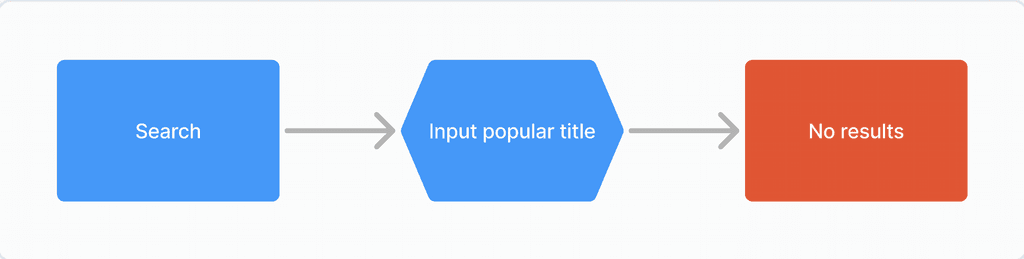

Students Needed a Path from Familiar Books to New Books

In our conversations with teachers, a consistent pattern in student behavior emerged. Students would start by searching for book titles they knew. When these familiar titles weren't available, most students simply stopped searching. The teachers also shared a key insight: students were most likely to choose and finish books that connected with their personal interests. With nothing to help students find books that matched those interests, most of our library went unexplored.

A small library and limited budget pushed us to find creative solutions

The team faced a clear challenge. Literal wanted to provide all the popular titles students were searching for, but doing so required time and money—both in short supply. A different approach was needed. Instead of expanding our library, we needed to help students discover the great books we already had. But how?

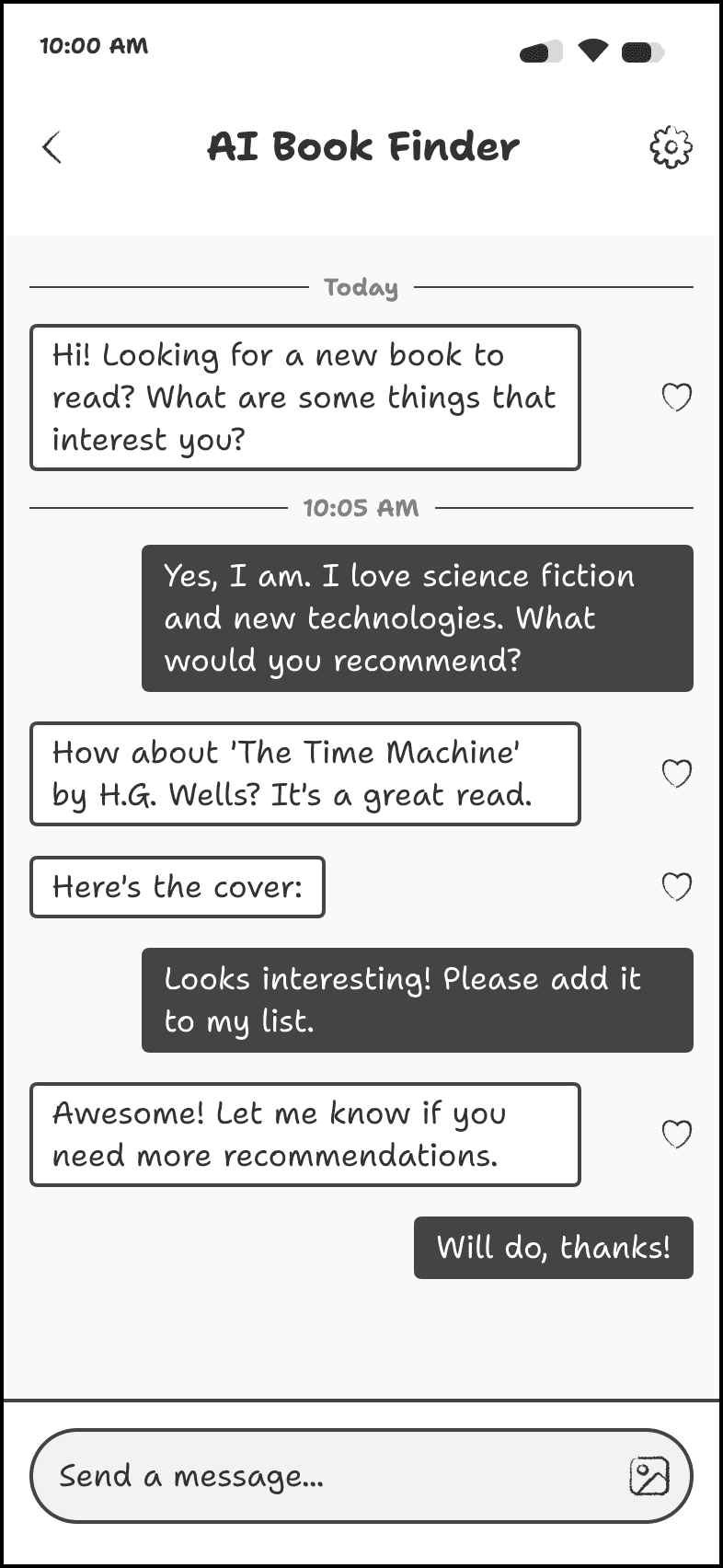

The release of OpenAI’s ChatGPT sparked an idea

While we were grappling with this problem, we learned of OpenAI and ChatGPT. This sparked a question for us: could we use OpenAI’s API to analyze the books in the Literal library and match readers with books they'd likely enjoy?

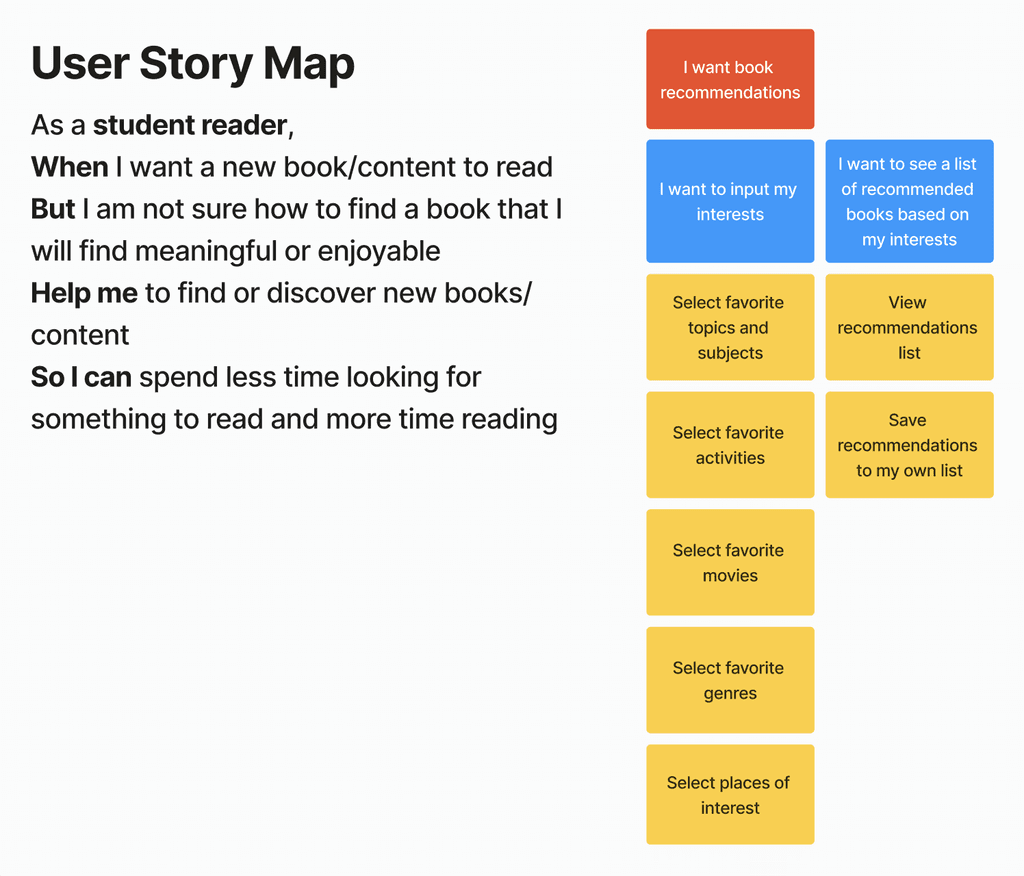

Mapping and prototyping helped us clarify and validate our idea

Building a feature with an emerging technology is inherently risky. Before committing substantial resources to development, we needed to pressure-test our thinking. We started by creating user story maps to visualize the ideal discovery experience. At the same time, we cataloged our library's book categories to understand what kinds of books we could recommend.

The technical validation came next. One of the engineers built a prototype that used OpenAI's API to analyze our book collection and generate recommendations based on user inputs. While the initial results showed promise, they also revealed areas for refinement. These early tests gave us the information and confidence needed to move forward.

Real Validation Required Real Code

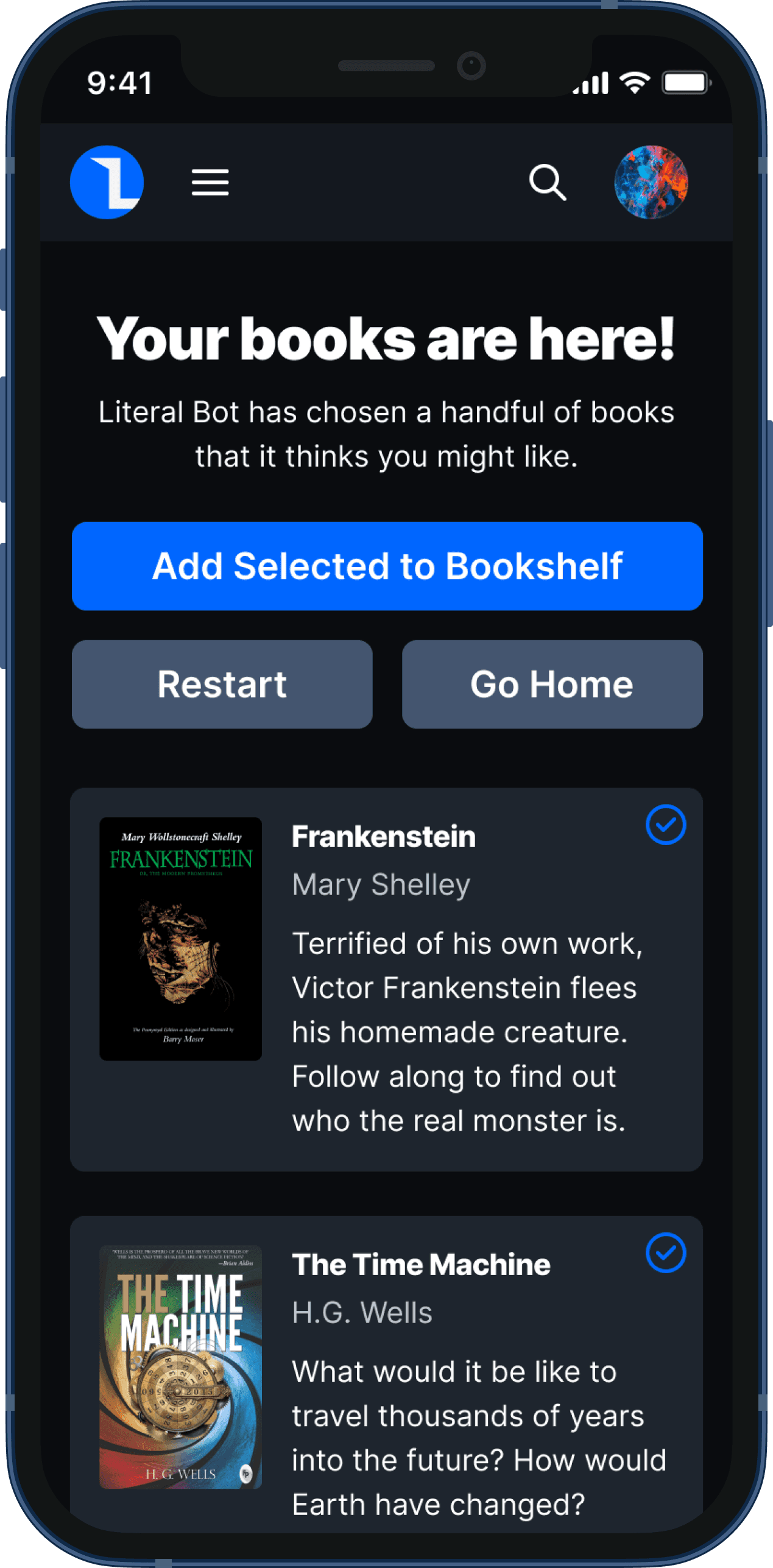

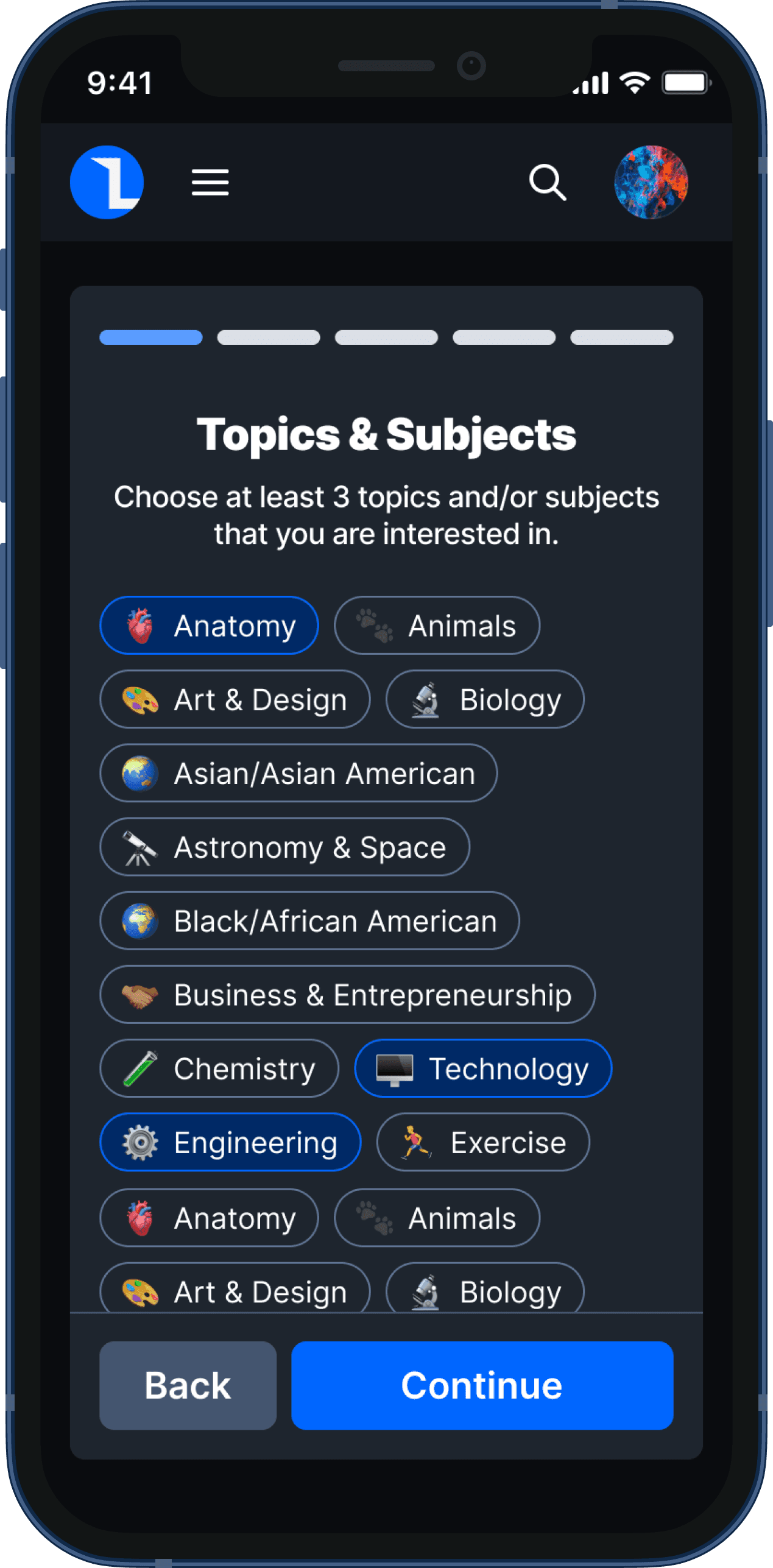

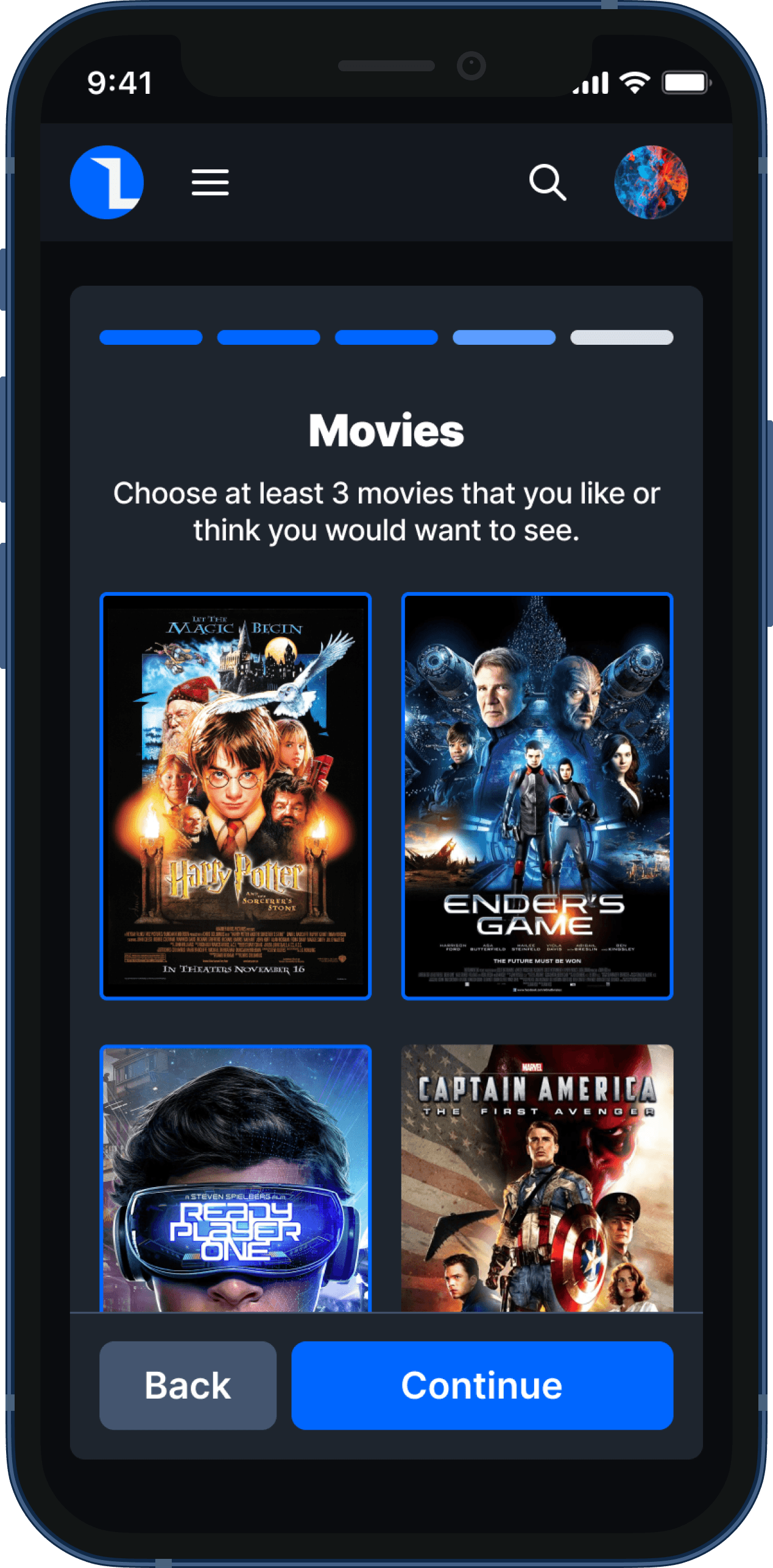

As we considered how to validate whether this would really make a difference for users, we realized that a non-code prototype couldn't reproduce the experience. The only way to learn whether the feature did the job was to build it and gather feedback from users. I designed a simple five-question survey that gathered data about a reader's interests, which we then fed into the OpenAI API. To make the build simpler and faster, we opted for a mobile interface that would not scale up on larger devices but would still present well.
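To make the survey-to-recommendation step concrete, here is a minimal sketch of how survey answers and a book catalog could be combined into a request for a chat-style model. It is an illustration only, not the code our engineers shipped: the `Book` fields, the `build_messages` function, the prompt wording, and the placeholder survey questions and titles are all assumptions. The sketch stops short of calling any API and only prepares the role/content messages.

```python
from dataclasses import dataclass


@dataclass
class Book:
    # Hypothetical catalog record; the real library schema is an assumption.
    title: str
    author: str
    tags: list[str]


def build_messages(
    answers: dict[str, str], catalog: list[Book], limit: int = 3
) -> list[dict[str, str]]:
    """Combine survey answers and catalog entries into chat-style messages."""
    survey_lines = [f"- {question}: {answer}" for question, answer in answers.items()]
    catalog_lines = [
        f"- {book.title} by {book.author} (tags: {', '.join(book.tags)})"
        for book in catalog
    ]
    # Assumed prompt wording: restrict the model to books we actually have.
    system = (
        "You recommend books to teenage readers. "
        f"Recommend up to {limit} titles, choosing only from the catalog provided."
    )
    user = (
        "Reader survey:\n" + "\n".join(survey_lines)
        + "\n\nCatalog:\n" + "\n".join(catalog_lines)
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


# Placeholder data for illustration; not real survey questions or library titles.
sample_answers = {
    "Favorite genre": "mystery",
    "A topic you care about": "friendship",
}
sample_catalog = [
    Book("Example Title A", "Author A", ["mystery", "school"]),
    Book("Example Title B", "Author B", ["sports", "friendship"]),
]
messages = build_messages(sample_answers, sample_catalog)
```

Limiting the model to the supplied catalog reflects the goal of the feature: surfacing books already in the library rather than popular titles we didn't have.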

Mixed Reception Revealed Future Opportunities

The launch brought mixed results that informed our path forward. Teachers enthusiastically embraced the feature, seeing its potential to expand student reading horizons. Student reactions were more nuanced: some appreciated the personalized recommendations, while others remained focused on accessing popular titles. This feedback highlighted the need to balance innovation with a fundamental user expectation: finding the titles students already know and want.